CLaM-TTS: Improving Neural Codec Language Modeling for Zero-shot Text-to-Speech

ICLR 2024

Abstract. With the emergence of neural audio codecs, which encode multiple streams of discrete tokens from audio, large language models have recently gained attention as a promising approach for zero-shot Text-to-Speech (TTS) synthesis. Despite the ongoing rush towards scaling paradigms, audio tokenization ironically amplifies the scalability challenge, stemming from its long sequence length and the complexity of modeling the multiple sequences. To mitigate these issues, we present CLaM-TTS, which employs probabilistic residual vector quantization to 1) achieve superior compression in token length, and 2) allow a language model to generate multiple tokens at once, thereby eliminating the need for cascaded modeling to handle the number of token streams. Our experimental results demonstrate that CLaM-TTS is better than or comparable to state-of-the-art neural codec-based TTS models regarding naturalness, intelligibility, speaker similarity, and inference speed. In addition, we examine the impact of the pretraining extent of the language models and their text tokenization strategies on performance.

Model Overview

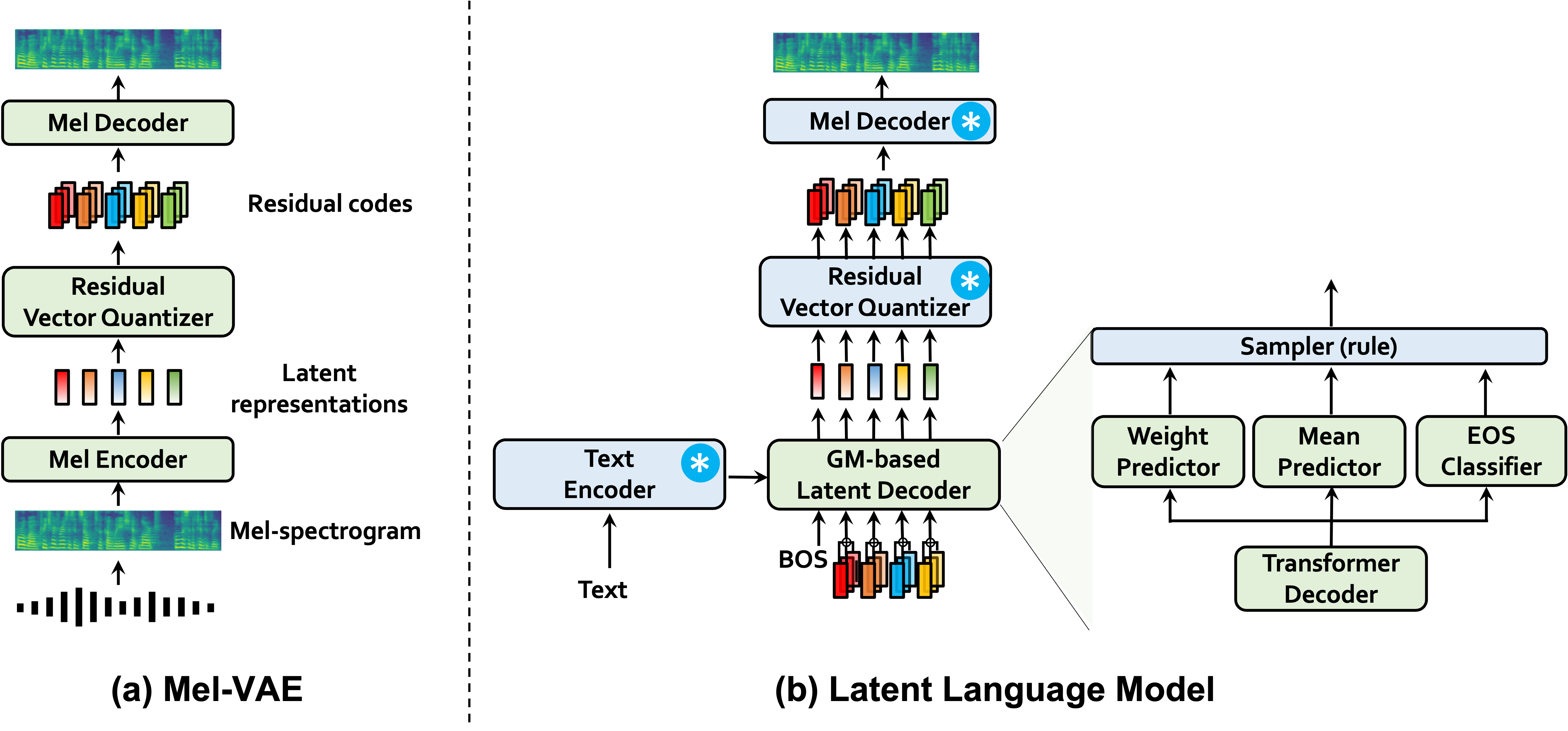

An overview of CLaM-TTS. Training unfolds in two stages: (a) the Mel-VAE autoencodes the mel-spectrogram into a discrete latent representation using probabilistic RVQ; (b) using the trained RVQ from the first stage, the latent language model, a Gaussian mixture (GM) based latent transformer decoder, is trained. The decoder aims to predict latent variables that, when quantized, match the ground-truth audio tokens.
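To make the phrase "latent variables that, when quantized, match the ground-truth audio tokens" concrete, the following is a minimal sketch of ordinary (deterministic) residual vector quantization. It is not the probabilistic RVQ used by the paper: the helper names `nearest`, `rvq_encode`, and `rvq_decode`, the toy codebooks, and the two-dimensional latent are all assumptions made for illustration. It only shows how a single latent vector maps to one token per codebook depth, which is why one predicted latent can yield several tokens at once.

```python
def nearest(codebook, vec):
    """Index of the codebook entry closest to vec (squared Euclidean distance)."""
    return min(
        range(len(codebook)),
        key=lambda i: sum((c - v) ** 2 for c, v in zip(codebook[i], vec)),
    )


def rvq_encode(latent, codebooks):
    """Quantize one latent vector into one token per codebook depth."""
    residual = list(latent)
    tokens = []
    for codebook in codebooks:
        idx = nearest(codebook, residual)
        tokens.append(idx)
        # Each depth quantizes whatever the previous depths left unexplained.
        residual = [r - c for r, c in zip(residual, codebook[idx])]
    return tokens


def rvq_decode(tokens, codebooks):
    """Reconstruct the latent as the sum of the selected code vectors."""
    out = [0.0] * len(codebooks[0][0])
    for idx, codebook in zip(tokens, codebooks):
        out = [o + c for o, c in zip(out, codebook[idx])]
    return out


# Toy codebooks (hypothetical values): depth 1 is coarse, depth 2 refines.
codebooks = [
    [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]],
    [[0.0, 0.0], [0.5, 0.0], [0.0, 0.5]],
]
tokens = rvq_encode([1.0, 0.4], codebooks)
print(tokens)                          # [1, 2]
print(rvq_decode(tokens, codebooks))   # [1.0, 0.5]
```

In this toy setting, a model that predicts the latent `[1.0, 0.4]` for a frame implicitly predicts both tokens `1` and `2` in a single step, rather than generating each token stream separately.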

Zero-Shot TTS (LibriSpeech)

Through in-context learning, CLaM-TTS produces speech from a reference audio and its corresponding text. The prompt is shown in blue and the generated audio in green. The generated speech closely resembles the reference in voice, background noise, and speaking style. Because prompts are divided into 3-second segments, a segment boundary does not always fall on silence and may cut a word off midway. For further details, see Section 4.2 of our paper. Click "Show baselines" to hear the ground truth and the baseline audio. The YourTTS and VALL-E samples are taken from the YourTTS demo and the VALL-E demo, respectively.
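The effect of the 3-second segmentation can be illustrated with a small sketch. The helper `crop_prompt` below is hypothetical and is not the authors' preprocessing code; it simply assumes the audio is a sequence of samples at a known sample rate and takes a fixed-length window, without looking at silence or word boundaries. That is why a cropped prompt can start or end in the middle of a word.

```python
def crop_prompt(samples, sample_rate, seconds=3.0, offset_seconds=0.0):
    """Return a fixed-length window of `seconds` starting at `offset_seconds`.

    The window is chosen purely by time, so it may begin or end mid-word.
    """
    start = int(round(offset_seconds * sample_rate))
    length = int(round(seconds * sample_rate))
    if start < 0 or start + length > len(samples):
        raise ValueError("requested window falls outside the utterance")
    return samples[start:start + length]


# A 5-second dummy utterance at 16 kHz (sample values are placeholders).
utterance = list(range(16000 * 5))
prompt = crop_prompt(utterance, sample_rate=16000)
print(len(prompt))  # 48000 samples = 3 seconds at 16 kHz
```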

Non-Zero-Shot TTS (VCTK)

VCTK is not an unseen dataset for our model, so these samples are not zero-shot; we provide them for comparison with other models. The prompt is shown in blue and the generated audio in green. The generated speech closely resembles the reference in voice, background noise, and speaking style. Because prompts are divided into 3-second segments, a segment boundary does not always fall on silence and may cut a word off midway. Click "Show baselines" to hear the ground truth and the baseline audio. The YourTTS and VALL-E samples are taken from the YourTTS demo and the VALL-E demo, respectively.

Zero-Shot TTS (Multilingual LibriSpeech)

The prompt is shown in blue and the generated audio in green. The generated speech closely resembles the reference in voice, background noise, and speaking style. Because prompts are divided into 3-second segments, a segment boundary does not always fall on silence and may cut a word off midway. For further details, see Section 4.3 of our paper. Click "Show baselines" to hear the ground truth and the baseline audio.

Celebrities

CLaM-TTS can imitate the voice and speaking style of famous figures. Each text was generated with GPT-4 to seamlessly continue the given prompt. The prompt is shown in blue and the generated audio in green. The generated speech closely resembles the reference in voice, background noise, and speaking style. Click "Show baselines" to hear the ground truth and the baseline audio.

Robustness

CLaM-TTS produces robust speech, as evidenced by its low word error rate (WER). The samples are taken from the Mega-TTS demo.

| Text | Mega-TTS | CLaM-TTS |
|---|---|---|
| Thursday, via a joint press release and Microsoft speech Blog, we will announce Microsoft’s continued partnership with Shell leveraging cloud, speech, and collaboration technology to drive industry innovation and transformation. | | |
| The great Greek grape growers grow great Greek grapes one one one. | | |
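For readers unfamiliar with the metric, WER is the word-level edit distance between a reference text and a transcript of the synthesized speech, divided by the number of reference words. The sketch below is a minimal illustration under stated assumptions: `word_error_rate` is a hypothetical helper, text normalization is reduced to lowercasing and whitespace splitting, and the transcript is assumed to come from some ASR system that is not specified on this page.

```python
def word_error_rate(reference, hypothesis):
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


reference = "the great greek grape growers grow great greek grapes"
transcript = "the great greek grape growers grow greek grapes"  # one word dropped
print(word_error_rate(reference, transcript))
```

Repetitive inputs such as the tongue twister in the table are a common stress test because skipped or repeated words show up directly as deletions or insertions in this count.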

Speech Diversity

When generating without a prompt, CLaM-TTS can synthesize speech with diverse prosody and timbre, as if from various speakers. The first four texts are taken from the VALL-E demo, and the last three are from the GigaSpeech test set.

| Text | Sample #1 | Sample #2 | Sample #3 | Sample #4 | Sample #5 |
|---|---|---|---|---|---|
| Because we do not need it. | | | | | |
| I must do something about it. | | | | | |
| He has not been named. | | | | | |
| Number ten, fresh nelly is waiting on you, good night husband. | | | | | |
| so it’s really for us. it’s kind of like the ah political, social headquarters or center for our community. | | | | | |
| provided it’s evidence evidence-based and rigorous but, also, i believe, fair. | | | | | |
| now you you've been with the office in Northern Ireland for ten years. | | | | | |

Text Prompt

CLaM-TTS can generate voices that match the speaker's meta information provided in the text, a feature we term "text prompting". By utilizing emotion information from the Korean dataset, CLaM-TTS is capable of generating emotional speech as demonstrated below. At times, even without explicit text prompting, the system discerns the text's meaning and outputs appropriately emotional speech. For more details, refer to Appendix B.2 of our paper.

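| Text prompt | Text | English gloss of text | Sample |
|---|---|---|---|
| woman, Happy, 행복하다: | 도련님은 나무토막을 지팡이로 짚고 싱글싱글 웃고 있다. | The young master is leaning on a piece of wood as a cane, grinning. | |
| man, Happy, 행복하다: | 도련님은 나무토막을 지팡이로 짚고 싱글싱글 웃고 있다. | The young master is leaning on a piece of wood as a cane, grinning. | |
| (no prompt) | 도련님은 나무토막을 지팡이로 짚고 싱글싱글 웃고 있다. | The young master is leaning on a piece of wood as a cane, grinning. | |
| woman, Sad, 그립다: | 사랑하는 부인을 얼마 전에 잃었다. | He lost his beloved wife not long ago. | |
| man, Sad, 그립다: | 사랑하는 부인을 얼마 전에 잃었다. | He lost his beloved wife not long ago. | |
| (no prompt) | 사랑하는 부인을 얼마 전에 잃었다. | He lost his beloved wife not long ago. | |
| woman, Angry, 언짢다: | 내가 오디오 좀 켜달라고 했잖아. | I asked you to turn on the audio, didn't I? | |
| man, Angry, 언짢다: | 내가 오디오 좀 켜달라고 했잖아. | I asked you to turn on the audio, didn't I? | |
| (no prompt) | 내가 오디오 좀 켜달라고 했잖아. | I asked you to turn on the audio, didn't I? | |
| woman, Anxious, 두렵다: | 산속, 물소리만 나고 바람이 무서웠습니다. | Deep in the mountains, there was only the sound of water, and the wind was frightening. | |
| man, Anxious, 두렵다: | 산속, 물소리만 나고 바람이 무서웠습니다. | Deep in the mountains, there was only the sound of water, and the wind was frightening. | |
| (no prompt) | 산속, 물소리만 나고 바람이 무서웠습니다. | Deep in the mountains, there was only the sound of water, and the wind was frightening. | |
| woman, Embarrassed, 아찔하다: | 가슴이 두근거리고 팔이 후둘후둘했다. | My heart was pounding and my arms were trembling. | |
| man, Embarrassed, 아찔하다: | 가슴이 두근거리고 팔이 후둘후둘했다. | My heart was pounding and my arms were trembling. | |
| (no prompt) | 가슴이 두근거리고 팔이 후둘후둘했다. | My heart was pounding and my arms were trembling. | |
| woman, Hurt, 섭섭하다: | 몇 번이나 이렇게 한탄을 했었다. | Many times I had lamented like this. | |
| man, Hurt, 섭섭하다: | 몇 번이나 이렇게 한탄을 했었다. | Many times I had lamented like this. | |
| (no prompt) | 몇 번이나 이렇게 한탄을 했었다. | Many times I had lamented like this. | |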

Comparison with LibriSpeech Zero-Shot Demo Cases

Our samples have been downsampled to 16 kHz for comparison.

Demos for each model can be found at their respective links: Mega-TTS, NaturalSpeech 2, VALL-E.

| Text | Prompt | Ground Truth | Mega-TTS | NaturalSpeech 2 | VALL-E | CLaM-TTS |
|---|---|---|---|---|---|---|
| They moved thereafter cautiously about the hut groping before and about them to find something to show that Warrenton had fulfilled his mission. | — | — | | | | |
| And lay me down in thy cold bed and leave my shining lot. | — | | | | | |
| Number ten, fresh nelly is waiting on you, good night husband. | — | — | | | | |
| Yea, his honourable worship is within, but he hath a godly minister or two with him, and likewise a leech. | | | | | | |
| Instead of shoes, the old man wore boots with turnover tops, and his blue coat had wide cuffs of gold braid. | — | | | | | |
| The army found the people in poverty and left them in comparative wealth. | | | | | | |
| Thus did this humane and right minded father comfort his unhappy daughter, and her mother embracing her again, did all she could to soothe her feelings. | — | | | | | |
| He was in deep converse with the clerk and entered the hall holding him by the arm. | — | | | | | |
| Indeed, there were only one or two strangers who could be admitted among the sisters without producing the same result. | — | — | | | | |
| For if he's anywhere on the farm, we can send for him in a minute. | — | — | | | | |
| Their piety would be like their names, like their faces, like their clothes, and it was idle for him to tell himself that their humble and contrite hearts it might be paid a far-richer tribute of devotion than his had ever been. A gift tenfold more acceptable than his elaborate adoration. | — | — | | | | |
| The air and the earth are curiously mated and intermingled as if the one were the breath of the other. | — | — | | | | |
| I had always known him to be restless in his manner, but on this particular occasion he was in such a state of uncontrollable agitation that it was clear something very unusual had occurred. | — | — | | | | |
| His death in this conjuncture was a public misfortune. | — | — | | | | |
| It is this that is of interest to theory of knowledge. | — | — | | | | |
| For a few miles, she followed the line hitherto presumably occupied by the coast of Algeria, but no land appeared to the south. | — | — | | | | |